I. Installation

1. Download the packages

Elastic product downloads: https://www.elastic.co/cn/downloads/

elasticsearch:https://www.elastic.co/cn/downloads/elasticsearch

kibana:https://www.elastic.co/cn/downloads/kibana

logstash: https://www.elastic.co/cn/downloads/logstash

II. Deployment

1. Deploy Elasticsearch

# Put the downloaded package under /usr/local/ and cd into it

cd /usr/local

# Extract the archive

tar -zxvf elasticsearch-7.6.2-linux-x86_64.tar.gz

# Create a dedicated account (for security reasons, Elasticsearch refuses to run as root)

useradd es

# Set its password

passwd es

# Create the data and logs directories

mkdir -p /home/es/elasticsearch/data

mkdir -p /home/es/elasticsearch/logs

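# Give the es account ownership of the data/log directories and the installation (safer than chmod -R 777)

chown -R es:es /home/es/elasticsearch

chown -R es:es /usr/local/elasticsearch-7.6.2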

# Go to the elasticsearch-7.6.2/config directory

cd /usr/local/elasticsearch-7.6.2/config

# Edit elasticsearch.yml

vim elasticsearch.yml

>>

http.cors.enabled: true

http.cors.allow-origin: "*"

network.host: 0.0.0.0

cluster.name: laokou-elasticsearch # customizable

node.name: node-elasticsearch # customizable

http.port: 9200

cluster.initial_master_nodes: ["node-elasticsearch"] # must match node.name

path.data: /home/es/elasticsearch/data # data directory

path.logs: /home/es/elasticsearch/logs # log directory

>>

# Edit jvm.options (the default heap is 1g)

vim jvm.options

>>

-Xms512m

-Xmx512m

>>
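Elasticsearch's documentation recommends setting -Xms and -Xmx to the same value so the heap is never resized at runtime. The 512m used here is simply a small value for a test machine. The sketch below uses a hypothetical helper, heap_settings_equal, that reads jvm.options and reports whether both values are present and equal. It only considers lines that begin with -Xms/-Xmx, and it assumes the install path used above.

```shell
#!/bin/bash
# Hypothetical helper: check that -Xms and -Xmx in a jvm.options file are equal.
# Only lines starting with -Xms / -Xmx count; commented-out lines (#) are ignored.
heap_settings_equal() {
  local file="$1" xms xmx
  xms=$(grep -E '^-Xms' "$file" 2>/dev/null | tail -n 1 | sed 's/^-Xms//')
  xmx=$(grep -E '^-Xmx' "$file" 2>/dev/null | tail -n 1 | sed 's/^-Xmx//')
  if [ -z "$xms" ] || [ -z "$xmx" ]; then
    echo "missing -Xms or -Xmx in $file"
    return 1
  fi
  if [ "$xms" != "$xmx" ]; then
    echo "heap mismatch: -Xms$xms vs -Xmx$xmx"
    return 1
  fi
  echo "heap OK: $xms"
}

# Path assumed from the install steps above; adjust if Elasticsearch lives elsewhere.
heap_settings_equal /usr/local/elasticsearch-7.6.2/config/jvm.options || true
```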

# Startup error

Error message: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

# Fix: raise vm.max_map_count in /etc/sysctl.conf (as root)

vim /etc/sysctl.conf

>>

vm.max_map_count = 655360

>>

# Reload the kernel settings

sysctl -p
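Before starting Elasticsearch again, a quick check confirms that the kernel picked up the new value. This is a minimal sketch: the function name map_count_ok is made up for illustration, and 262144 is the minimum quoted in the error message above. `sysctl -n` prints just the value, without the key.

```shell
#!/bin/bash
# Minimum taken from the Elasticsearch bootstrap-check error message above.
REQUIRED_MAP_COUNT=262144

# Hypothetical helper: returns 0 if the given value is at least REQUIRED_MAP_COUNT.
map_count_ok() {
  [ "$1" -ge "$REQUIRED_MAP_COUNT" ]
}

# Read the value the kernel is currently using (after `sysctl -p`).
current=$(sysctl -n vm.max_map_count 2>/dev/null)
if [ -n "$current" ] && map_count_ok "$current"; then
  echo "vm.max_map_count=$current (OK)"
else
  echo "vm.max_map_count=${current:-unknown}, need at least $REQUIRED_MAP_COUNT"
fi
```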

# Raise the open-file limits for the es user in limits.conf

vim /etc/security/limits.conf

>>

es soft nofile 65535

es hard nofile 65537

>>
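This file handles the second bootstrap check, the open-file limit. Elasticsearch 7.x enforces bootstrap checks when it binds to a non-loopback address (as with network.host: 0.0.0.0), and it will not start unless the process may open at least 65535 files. limits.conf is applied by PAM when a new session starts, so run the sketch below as the es user in a fresh login (for example after su - es). The helper name nofile_ok is hypothetical.

```shell
#!/bin/bash
# Elasticsearch 7.x bootstrap check: at least 65535 open file descriptors.
REQUIRED_NOFILE=65535

# Hypothetical helper: returns 0 if the limit is "unlimited" or at least REQUIRED_NOFILE.
nofile_ok() {
  [ "$1" = "unlimited" ] && return 0
  [ "$1" -ge "$REQUIRED_NOFILE" ]
}

# Limits of the current shell; run this as es in a new login session.
soft=$(ulimit -S -n)
hard=$(ulimit -H -n)
if nofile_ok "$soft"; then
  echo "nofile soft=$soft hard=$hard (OK)"
else
  echo "nofile soft=$soft hard=$hard, need at least $REQUIRED_NOFILE"
fi
```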

# Switch to the new account

su es

# Run Elasticsearch

cd /usr/local/elasticsearch-7.6.2/bin

./elasticsearch

# Open http://localhost:9200; if it returns a JSON document, Elasticsearch is running
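To script this check, note that the JSON returned by the root endpoint includes the tagline "You Know, for Search". The sketch below uses a hypothetical helper, looks_like_es, to test for that string. If it matches, the script prints cluster health via the _cluster/health API. It assumes curl is installed and Elasticsearch listens on localhost:9200 as configured above.

```shell
#!/bin/bash
# Hypothetical helper: returns 0 if the HTTP body looks like the
# Elasticsearch root endpoint response (it contains the standard tagline).
looks_like_es() {
  printf '%s' "$1" | grep -q 'You Know, for Search'
}

# Assumes Elasticsearch on localhost:9200, as set in elasticsearch.yml above.
body=$(curl -s --max-time 5 http://localhost:9200 2>/dev/null)
if looks_like_es "$body"; then
  echo "Elasticsearch is up"
  curl -s --max-time 5 'http://localhost:9200/_cluster/health?pretty'
else
  echo "Elasticsearch is not reachable on localhost:9200"
fi
```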

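Start in the background instead (run this from /usr/local/elasticsearch-7.6.2, since the path is ./bin/elasticsearch):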

>>

nohup ./bin/elasticsearch > nohup.out 2>&1 &

>>

# Common errors and fixes

1. JDK version warning at startup (Elasticsearch 7.6 needs at least JDK 1.8)

>>

future versions of Elasticsearch will require Java 11; your Java version from [/data/Java/jdk1.8.0_291/jre] does not meet this requirement

>>

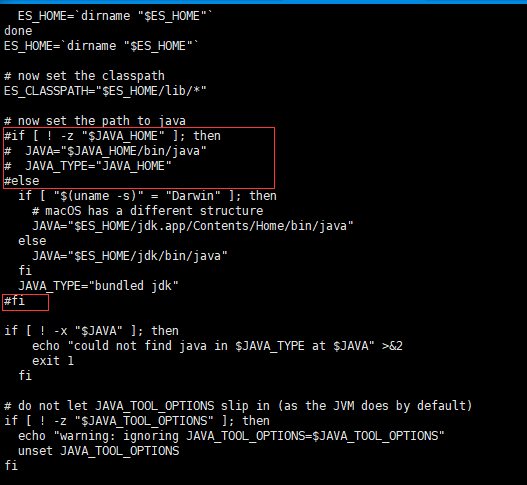

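Fix:

Edit bin/elasticsearch-env in the Elasticsearch install directory and comment out the relevant section of code. The warning appears because JAVA_HOME points to JDK 8. It is only a warning, and Elasticsearch 7.6.2 still starts. The tarball also ships a bundled JDK (in its jdk/ directory) that Elasticsearch uses when JAVA_HOME is not set, so unsetting JAVA_HOME for the es user avoids the warning as well.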

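Install the IK, pinyin, and dynamic-synonym analysis plugins. The commands below assume the zip files are already in /opt. Each plugin must be built for Elasticsearch 7.6.2, and Elasticsearch must be restarted to load it.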

su root

mkdir -p /usr/local/elasticsearch-7.6.2/plugins/analysis-synonym

mkdir -p /usr/local/elasticsearch-7.6.2/plugins/analysis-ik

mkdir -p /usr/local/elasticsearch-7.6.2/plugins/analysis-pinyin

yum install -y unzip zip

unzip -d /usr/local/elasticsearch-7.6.2/plugins/analysis-ik /opt/elasticsearch-analysis-ik-7.6.2.zip

unzip -d /usr/local/elasticsearch-7.6.2/plugins/analysis-pinyin /opt/elasticsearch-analysis-pinyin-7.6.2.zip

unzip -d /usr/local/elasticsearch-7.6.2/plugins/analysis-synonym /opt/elasticsearch-analysis-dynamic-synonym-7.6.2.zip
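At startup, Elasticsearch loads every directory under plugins/ and expects plugin-descriptor.properties at the top level of each one. A zip that unpacks into an extra nested folder therefore typically stops the node from starting. The sketch below uses a hypothetical helper, check_plugins, that lists each plugin directory and flags any missing descriptor. For each descriptor it finds, it also prints the recorded elasticsearch.version, which should read 7.6.2 here.

```shell
#!/bin/bash
# Hypothetical helper: every directory under plugins/ needs plugin-descriptor.properties
# at its top level; returns 1 if any directory lacks it.
check_plugins() {
  local plugins_dir="$1" dir missing=0
  for dir in "$plugins_dir"/*/; do
    [ -d "$dir" ] || continue
    if [ -f "${dir}plugin-descriptor.properties" ]; then
      # Show the Elasticsearch version the plugin was built for.
      echo "${dir}: $(grep '^elasticsearch.version' "${dir}plugin-descriptor.properties")"
    else
      echo "${dir}: missing plugin-descriptor.properties"
      missing=1
    fi
  done
  return "$missing"
}

# Path assumed from the steps above.
check_plugins /usr/local/elasticsearch-7.6.2/plugins || true
```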

2. Deploy Kibana

# Put the package under /usr/local and extract it

cd /usr/local/

tar -zxvf kibana-7.6.2-linux-x86_64.tar.gz

# Go into the Kibana directory

cd kibana-7.6.2-linux-x86_64/

# Edit the config file

vim config/kibana.yml

# Add the following settings (or uncomment the matching lines already in kibana.yml)

server.port: 5601

server.host: "0.0.0.0"

elasticsearch.hosts: ["http://localhost:9200"]

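# Start Kibana (Kibana 7 will not start as root unless you pass --allow-root; alternatively, chown the directory to a regular user such as es and start it as that user)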

./bin/kibana

# Open http://localhost:5601 in a browser

# Start in the background instead

>>

nohup ./bin/kibana > nohup.out 2>&1 &

>>

3. Deploy Logstash

# Download the package into /usr/local/

cd /usr/local

wget https://artifacts.elastic.co/downloads/logstash/logstash-7.6.2.tar.gz

# Extract

tar -zxvf logstash-7.6.2.tar.gz

# Enter the Logstash directory and create logstash.conf under config

cd /usr/local/logstash-7.6.2

touch config/logstash.conf

vi config/logstash.conf

# Write the pipeline to suit your own business needs

>>

input {
  tcp {
    mode => "server"
    host => "0.0.0.0"
    port => 4560
    codec => json_lines
    type => "debug"
  }
  tcp {
    mode => "server"
    host => "0.0.0.0"
    port => 4561
    codec => json_lines
    type => "error"
  }
  tcp {
    mode => "server"
    host => "0.0.0.0"
    port => 4562
    codec => json_lines
    type => "business"
  }
  tcp {
    mode => "server"
    host => "0.0.0.0"
    port => 4563
    codec => json_lines
    type => "record"
  }
}

filter {
  if [type] == "record" {
    mutate {
      remove_field => ["port", "host", "@version"]
    }
    json {
      source => "message"
      remove_field => ["message"]
    }
  }
}

output {
  elasticsearch {
    hosts => ["localhost:9200"]
    index => "sjzdry-%{type}-%{+YYYY.MM.dd}"
  }
}

>>
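The index option creates one index per type and day. Logstash fills %{+YYYY.MM.dd} from the event's @timestamp, which is in UTC. With Asia/Shanghai (UTC+8) timestamps, events logged between 00:00 and 08:00 local time therefore go into the previous day's index. The sketch below computes the expected index name with GNU date. It also sends one test event to the debug input through bash's /dev/tcp redirection. The function names and the test message are illustrative only.

```shell
#!/bin/bash
# Index name produced by: index => "sjzdry-%{type}-%{+YYYY.MM.dd}"
# Logstash formats %{+...} from @timestamp in UTC, hence `date -u`.
# Requires GNU date for the -d @EPOCH syntax.
index_name() {
  local log_type="$1"   # debug | error | business | record
  local epoch="$2"      # seconds since 1970-01-01 00:00:00 UTC
  echo "sjzdry-${log_type}-$(date -u -d "@${epoch}" +%Y.%m.%d)"
}

# Hypothetical test: send one JSON line to the "debug" input (port 4560).
# json_lines splits events on newlines; /dev/tcp is a bash feature.
send_test_event() {
  printf '%s\n' '{"message":"hello from shell"}' > /dev/tcp/127.0.0.1/4560
}

if send_test_event 2>/dev/null; then
  echo "sent; expected index: $(index_name debug "$(date +%s)")"
else
  echo "could not connect to Logstash on 127.0.0.1:4560"
fi
```

For example, index_name debug 1704484800 (2024-01-05 20:00 UTC, which is 2024-01-06 04:00 in Shanghai) prints sjzdry-debug-2024.01.05.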

# Start Logstash

cd /usr/local/logstash-7.6.2

./bin/logstash -f ./config/logstash.conf

III. Integrating Spring Boot with ELK

1. Configure Spring Boot logging

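Add a logback-spring.xml file to the project's resources folder. LogstashTcpSocketAppender and LoggingEventCompositeJsonEncoder come from the logstash-logback-encoder library (net.logstash.logback:logstash-logback-encoder), which must be on the classpath. The Logstash host is read from the logstash.host property and defaults to localhost.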

<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE configuration>
<configuration>
    <!--Reuse Spring Boot's default logging configuration-->
    <include resource="org/springframework/boot/logging/logback/defaults.xml"/>
    <!--Use the default console appender-->
    <include resource="org/springframework/boot/logging/logback/console-appender.xml"/>
    <!--Application name-->
    <springProperty scope="context" name="APP_NAME" source="spring.application.name" defaultValue="springBoot"/>
    <!--Directory where log files are written-->
    <property name="LOG_FILE_PATH" value="${LOG_FILE:-${LOG_PATH:-${LOG_TEMP:-${java.io.tmpdir:-/tmp}}}/logs}"/>
    <!--Logstash host-->
    <springProperty name="LOG_STASH_HOST" scope="context" source="logstash.host" defaultValue="localhost"/>

    <!--Write DEBUG logs to files-->
    <appender name="FILE_DEBUG" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <!--Accept DEBUG and above-->
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>DEBUG</level>
        </filter>
        <encoder>
            <!--Use the default file log pattern-->
            <pattern>${FILE_LOG_PATTERN}</pattern>
            <charset>UTF-8</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
            <!--File name pattern-->
            <fileNamePattern>${LOG_FILE_PATH}/debug/${APP_NAME}-%d{yyyy-MM-dd}-%i.log</fileNamePattern>
            <!--Start a new file once this size is exceeded (default 10MB)-->
            <maxFileSize>${LOG_FILE_MAX_SIZE:-10MB}</maxFileSize>
            <!--Days to keep log files (default 30)-->
            <maxHistory>${LOG_FILE_MAX_HISTORY:-30}</maxHistory>
        </rollingPolicy>
    </appender>

    <!--Write ERROR logs to files-->
    <appender name="FILE_ERROR" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <!--Accept only ERROR-->
        <filter class="ch.qos.logback.classic.filter.LevelFilter">
            <level>ERROR</level>
            <onMatch>ACCEPT</onMatch>
            <onMismatch>DENY</onMismatch>
        </filter>
        <encoder>
            <!--Use the default file log pattern-->
            <pattern>${FILE_LOG_PATTERN}</pattern>
            <charset>UTF-8</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
            <!--File name pattern-->
            <fileNamePattern>${LOG_FILE_PATH}/error/${APP_NAME}-%d{yyyy-MM-dd}-%i.log</fileNamePattern>
            <!--Start a new file once this size is exceeded (default 10MB)-->
            <maxFileSize>${LOG_FILE_MAX_SIZE:-10MB}</maxFileSize>
            <!--Days to keep log files (default 30)-->
            <maxHistory>${LOG_FILE_MAX_HISTORY:-30}</maxHistory>
        </rollingPolicy>
    </appender>

    <!--Send DEBUG logs to Logstash (tcp input on port 4560, type "debug")-->
    <appender name="LOG_STASH_DEBUG" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>DEBUG</level>
        </filter>
        <destination>${LOG_STASH_HOST}:4560</destination>
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">
            <providers>
                <timestamp>
                    <timeZone>Asia/Shanghai</timeZone>
                </timestamp>
                <!--Custom JSON layout-->
                <pattern>
                    <pattern>
                        {
                        "project": "mall-swarm",
                        "level": "%level",
                        "service": "${APP_NAME:-}",
                        "pid": "${PID:-}",
                        "thread": "%thread",
                        "class": "%logger",
                        "message": "%message",
                        "stack_trace": "%exception{20}"
                        }
                    </pattern>
                </pattern>
            </providers>
        </encoder>
        <!--With several Logstash servers, connect to them round-robin-->
        <connectionStrategy>
            <roundRobin>
                <connectionTTL>5 minutes</connectionTTL>
            </roundRobin>
        </connectionStrategy>
    </appender>

    <!--Send ERROR logs to Logstash (tcp input on port 4561, type "error")-->
    <appender name="LOG_STASH_ERROR" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <filter class="ch.qos.logback.classic.filter.LevelFilter">
            <level>ERROR</level>
            <onMatch>ACCEPT</onMatch>
            <onMismatch>DENY</onMismatch>
        </filter>
        <destination>${LOG_STASH_HOST}:4561</destination>
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">
            <providers>
                <timestamp>
                    <timeZone>Asia/Shanghai</timeZone>
                </timestamp>
                <!--Custom JSON layout-->
                <pattern>
                    <pattern>
                        {
                        "project": "mall-swarm",
                        "level": "%level",
                        "service": "${APP_NAME:-}",
                        "pid": "${PID:-}",
                        "thread": "%thread",
                        "class": "%logger",
                        "message": "%message",
                        "stack_trace": "%exception{20}"
                        }
                    </pattern>
                </pattern>
            </providers>
        </encoder>
        <!--With several Logstash servers, connect to them round-robin-->
        <connectionStrategy>
            <roundRobin>
                <connectionTTL>5 minutes</connectionTTL>
            </roundRobin>
        </connectionStrategy>
    </appender>

    <!--Send business logs to Logstash (tcp input on port 4562, type "business")-->
    <appender name="LOG_STASH_BUSINESS" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <destination>${LOG_STASH_HOST}:4562</destination>
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">
            <providers>
                <timestamp>
                    <timeZone>Asia/Shanghai</timeZone>
                </timestamp>
                <!--Custom JSON layout-->
                <pattern>
                    <pattern>
                        {
                        "project": "mall-swarm",
                        "level": "%level",
                        "service": "${APP_NAME:-}",
                        "pid": "${PID:-}",
                        "thread": "%thread",
                        "class": "%logger",
                        "message": "%message",
                        "stack_trace": "%exception{20}"
                        }
                    </pattern>
                </pattern>
            </providers>
        </encoder>
        <!--With several Logstash servers, connect to them round-robin-->
        <connectionStrategy>
            <roundRobin>
                <connectionTTL>5 minutes</connectionTTL>
            </roundRobin>
        </connectionStrategy>
    </appender>

    <!--Send API access records to Logstash (tcp input on port 4563, type "record")-->
    <appender name="LOG_STASH_RECORD" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <destination>${LOG_STASH_HOST}:4563</destination>
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">
            <providers>
                <timestamp>
                    <timeZone>Asia/Shanghai</timeZone>
                </timestamp>
                <!--Custom JSON layout-->
                <pattern>
                    <pattern>
                        {
                        "project": "mall-swarm",
                        "level": "%level",
                        "service": "${APP_NAME:-}",
                        "class": "%logger",
                        "message": "%message"
                        }
                    </pattern>
                </pattern>
            </providers>
        </encoder>
        <!--With several Logstash servers, connect to them round-robin-->
        <connectionStrategy>
            <roundRobin>
                <connectionTTL>5 minutes</connectionTTL>
            </roundRobin>
        </connectionStrategy>
    </appender>

    <!--Limit log output from frameworks-->
    <logger name="org.slf4j" level="INFO"/>
    <logger name="springfox" level="INFO"/>
    <logger name="io.swagger" level="INFO"/>
    <logger name="org.springframework" level="INFO"/>
    <logger name="org.hibernate.validator" level="INFO"/>
    <logger name="com.alibaba.nacos.client.naming" level="INFO"/>

    <root level="DEBUG">
        <appender-ref ref="CONSOLE"/>
        <appender-ref ref="FILE_DEBUG"/>
        <appender-ref ref="FILE_ERROR"/>
        <appender-ref ref="LOG_STASH_DEBUG"/>
        <appender-ref ref="LOG_STASH_ERROR"/>
    </root>

    <!--com.macro.mall and the "mall-swarm" project name come from the project this config was written for; replace them with your own-->
    <logger name="com.macro.mall.common.log.WebLogAspect" level="DEBUG">
        <appender-ref ref="LOG_STASH_RECORD"/>
    </logger>

    <logger name="com.macro.mall" level="DEBUG">
        <appender-ref ref="LOG_STASH_BUSINESS"/>
    </logger>
</configuration>

Then just run the application!

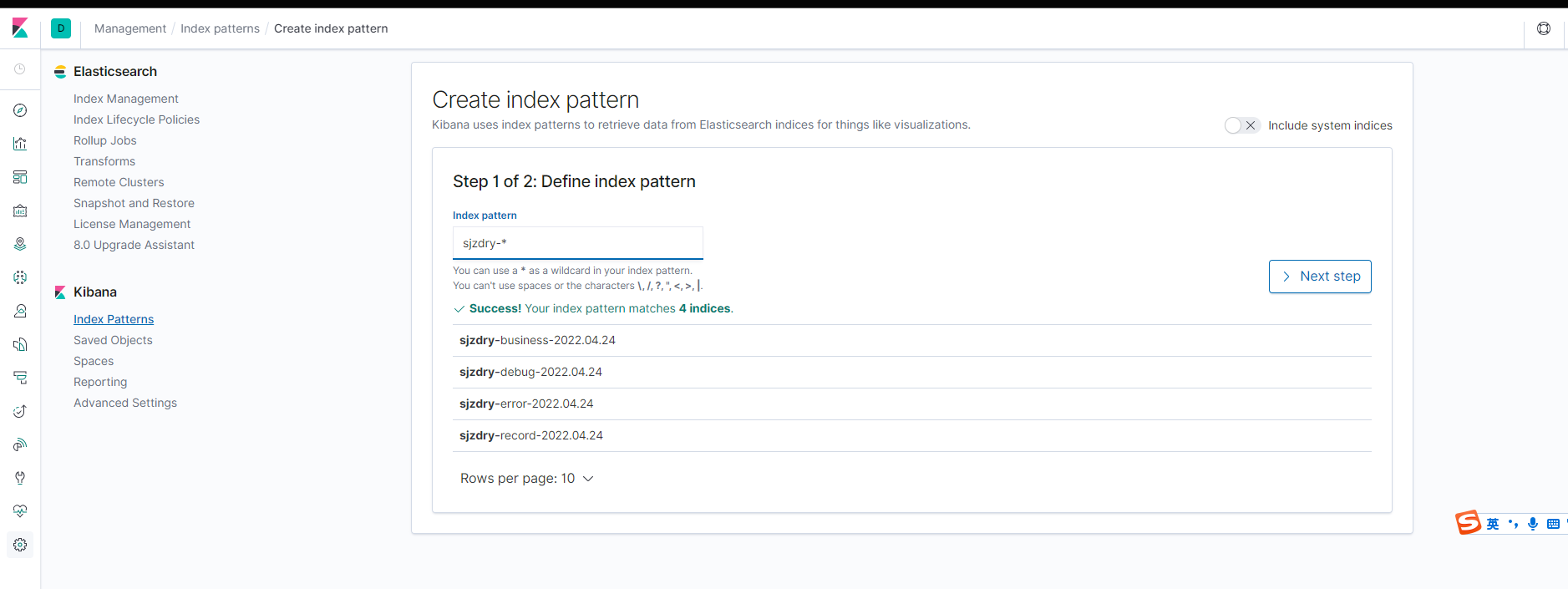

2. Configure Kibana to query the logs

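First, open Kibana and create an index pattern that matches your log indices with a wildcard (for example sjzdry-*, under Management > Index Patterns in Kibana 7.6).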

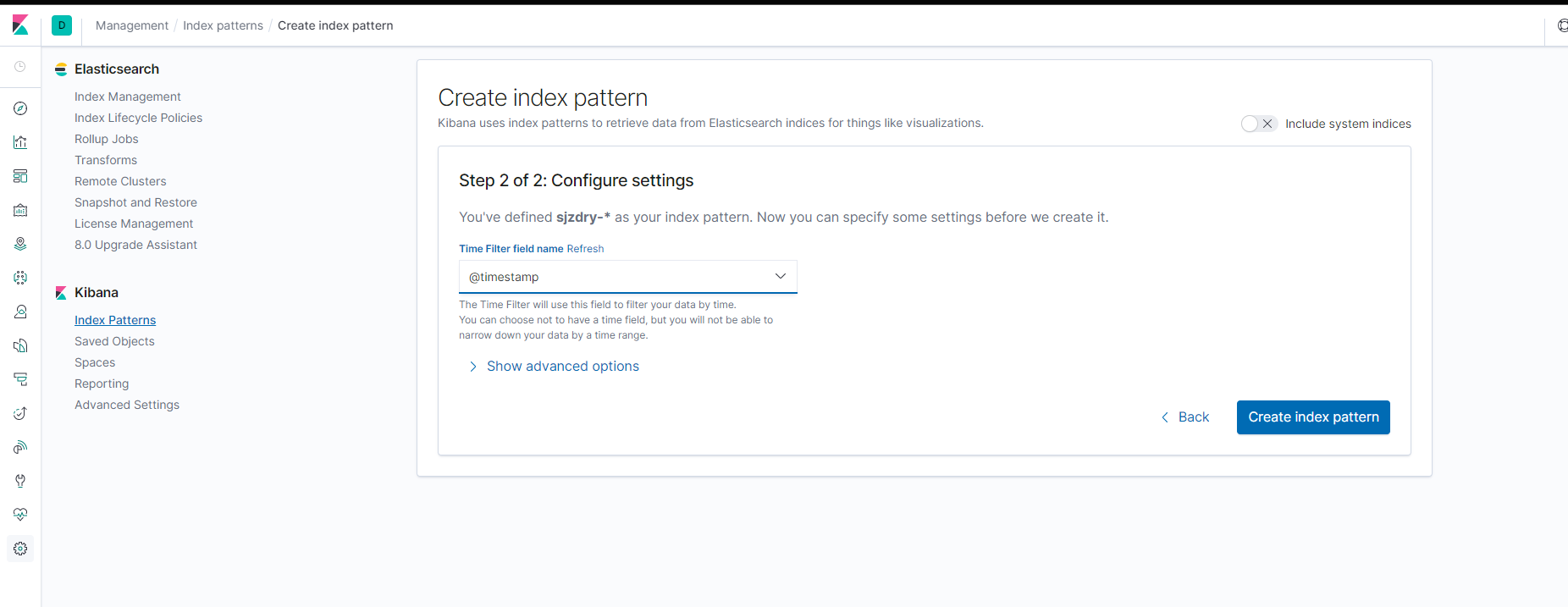

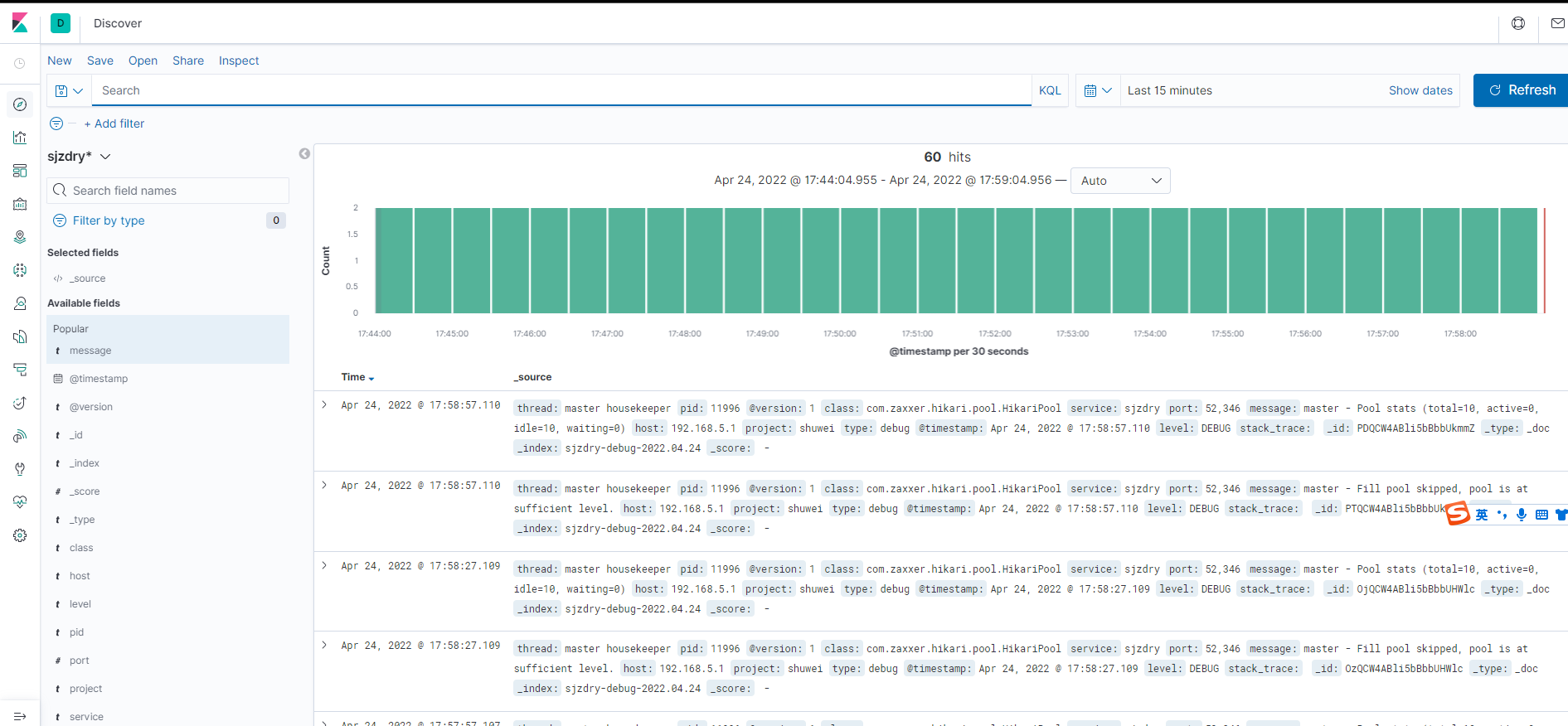

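Choose the time field (for example @timestamp), click Create index pattern, and refresh. The logs can then be browsed in Discover.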
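If no index appears while creating the index pattern, ask Elasticsearch directly which indices exist. The sketch below calls the _cat/indices API with the same sjzdry-* wildcard, where v adds a header row and s=index sorts by name. It also includes a hypothetical index_matches helper that roughly models how such a wildcard matches index names. It assumes Elasticsearch on localhost:9200, which can be overridden with ES_URL.

```shell
#!/bin/bash
# Hypothetical helper: rough model of how a wildcard pattern such as "sjzdry-*"
# matches an index name (the unquoted $1 is used as a shell glob).
index_matches() {
  case "$2" in
    $1) return 0 ;;
    *) return 1 ;;
  esac
}

# List the indices Logstash has created so far; assumes Elasticsearch on localhost:9200.
ES_URL="${ES_URL:-http://localhost:9200}"
curl -s --max-time 5 "${ES_URL}/_cat/indices/sjzdry-*?v&s=index" \
  || echo "could not reach Elasticsearch at ${ES_URL}"
```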

Done.
